Big data technologies go hand in hand with scientific, research, and commercial activities. They are used wherever increasingly efficient systems for storing and classifying information are needed. In commerce, big data is most often used to forecast business development or to test marketing hypotheses. Before it can be analyzed, the information has to be collected, cleaned, organized, and labelled. Below we discuss some basic techniques for working with big data.

Collecting and integrating data

Working with big data often involves gathering dissimilar information from a variety of sources. Before you can work with this data, you need to bring it together. You cannot simply load everything into one database, because different sources can produce data in different formats. This is where data blending and integration help: fragmented information is brought into a single form.

To bring data into a single format, equivalent values and fields are mapped to one consistent name, for example by merging the labels "female" and "women" into a single value. Then the data is supplemented: if two sources describe the same object, information from the first source is complemented with data from the second to give a complete picture. Redundant data is also filtered out, for example records in which required values are missing, or fields that are not needed for the analysis and would only add complexity and increase computation time.
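The three steps above (unify, supplement, filter) can be sketched in a few lines of plain Python. The alias table, the field names, and the two sample records are assumptions made up for this illustration, not part of any particular tool.

```python
# Hypothetical mapping of label variants to one canonical value.
GENDER_ALIASES = {
    "female": "female", "woman": "female", "women": "female", "f": "female",
    "male": "male", "man": "male", "men": "male", "m": "male",
}

def normalize_gender(value):
    """Map a raw label to its canonical form; unknown labels become None."""
    if value is None:
        return None
    return GENDER_ALIASES.get(value.strip().lower())

def merge_records(primary, secondary, keep_fields):
    """Supplement `primary` with values from `secondary`, keeping only needed fields."""
    merged = {}
    for field in keep_fields:
        value = primary.get(field)
        if value is None:  # gap in the first source: fall back to the second
            value = secondary.get(field)
        merged[field] = value
    return merged

# Two hypothetical sources describing the same customer.
crm_record = {"id": 17, "gender": "Women", "city": None, "browser": "Firefox"}
shop_record = {"id": 17, "gender": "female", "city": "Lyon"}

crm_record["gender"] = normalize_gender(crm_record["gender"])
shop_record["gender"] = normalize_gender(shop_record["gender"])

# "browser" is not needed for the analysis, so it is left out of keep_fields.
customer = merge_records(crm_record, shop_record, keep_fields=("id", "gender", "city"))
print(customer)  # {'id': 17, 'gender': 'female', 'city': 'Lyon'}
```

In a real pipeline the alias table would usually be maintained as reference data rather than hard-coded.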

When there are several sources, data integration is applied. Suppose your store uses multiple sales channels: offline, your website, and a marketplace. The figures need to be unified and brought into a single database to obtain complete information on sales and market demand. Traditional data integration methods are, for the most part, based on extraction, transformation, and loading (ETL). After integration, big data is subjected to further analysis and other manipulations. For instance, data is retrieved, cleaned and processed, placed in a corporate data warehouse, and then extracted for analysis.
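As a rough sketch of the ETL flow for the three sales channels, the example below uses Python's built-in `sqlite3` module as a stand-in for a corporate data warehouse. The record layouts, the table name `sales`, and the sample figures are all hypothetical.

```python
import sqlite3

# Hypothetical raw exports from three sales channels, each in its own format.
offline_sales = [("2024-03-01", "mug", 3, 4.50)]  # date, item, quantity, unit price
website_sales = [{"day": "2024-03-01", "sku": "mug", "total": 9.00, "count": 2}]
marketplace_sales = ["2024-03-02;mug;1;4.50"]     # semicolon-separated text

def extract_and_transform():
    """Yield rows in one shared shape: (date, channel, item, quantity, revenue)."""
    for date, item, qty, price in offline_sales:
        yield (date, "offline", item, qty, qty * price)
    for row in website_sales:
        yield (row["day"], "website", row["sku"], row["count"], row["total"])
    for line in marketplace_sales:
        date, item, qty, price = line.split(";")
        yield (date, "marketplace", item, int(qty), int(qty) * float(price))

def load(rows):
    """Load the unified rows into an in-memory database and return the connection."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE sales "
        "(date TEXT, channel TEXT, item TEXT, quantity INTEGER, revenue REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?, ?)", rows)
    conn.commit()
    return conn

conn = load(extract_and_transform())
total_quantity, total_revenue = conn.execute(
    "SELECT SUM(quantity), SUM(revenue) FROM sales"
).fetchone()
print(total_quantity, total_revenue)  # 6 27.0
```

Once the channels share one table, questions such as total demand per item become a single query instead of three separate reports.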

Machine learning and neural networks

Machine learning is based on the empirical analysis of information and the subsequent construction of algorithms for self-learning systems. It solves the problem of finding patterns in data; based on these patterns, the algorithm can make predictions. Machine learning is classed as an artificial intelligence method because it does not solve a problem directly but learns to apply a solution to many similar problems. Some advanced ML methods are implemented with neural networks. These consist of many artificial neurons which, when trained, form connections and can then analyze information.

Neural networks apply a general algorithm: they receive data as input and run it through their neurons, and at the output the network gives a result. Quite often, a neural network can find a pattern that is not evident to a human analyst. For a neural network to work, it must first be trained. Neural networks are usually reserved for tasks that involve huge volumes of data, as they are among the most computationally expensive ML models.
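To make "running data through neurons" concrete, here is a minimal sketch of a forward pass through a tiny network with two inputs, two hidden neurons, and one output. The weights are hand-picked for illustration only; a real network learns them during training, and the sigmoid activation is just one common choice.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of the inputs and applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

# Illustrative, hand-picked parameters (assumptions, not trained values).
hidden_weights = [[0.5, -0.4], [0.3, 0.8]]
hidden_biases = [0.0, -0.1]
output_weights = [[1.2, -0.7]]
output_biases = [0.05]

def forward(inputs):
    """Run the inputs through the hidden layer, then through the output neuron."""
    hidden = layer(inputs, hidden_weights, hidden_biases)
    return layer(hidden, output_weights, output_biases)[0]

score = forward([1.0, 2.0])
print(round(score, 3))
```

The output is a single number between 0 and 1, which could be read as the network's confidence in one of two classes.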

Machine learning frees the programmer from explaining to the machine in detail how it should solve a problem. Instead, the computer is trained to find a solution on its own. The algorithm is given a set of training data and then uses what it has learned to process new data. For example, machines learn to recognize images and classify them: they can recognize text, numbers, people, and landscapes. Computers identify the distinguishing features used for sorting and take into account the context in which they appear.

Naturally, one should not expect a correct answer in every case, since mistakes happen. Both correct and erroneous recognition results go into the database, enabling the program to learn from its errors and cope better with the task at hand. In theory, this training process can continue indefinitely, which is the essence of learning. For example, to sort images of male and female faces, reference samples are sent to the neural network with each face clearly labelled as male or female. From these, the neural network works out by what criteria to distinguish the faces; that is, it learns how to do it.

Then test the neural network by sending it a new, cleaned sample, this time without specifying the gender of each person. This shows you the error rate of the neural network and lets you decide whether that share of errors is acceptable. If the share of erroneous decisions remains acceptable after training and testing, you can process big data using the neural network.
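The train-then-test cycle can be sketched with a perceptron, a single artificial neuron used here as a much simpler stand-in for a full neural network. The two numeric features per sample and all the data points are hypothetical; real face classification would work on image data with a far larger model.

```python
def predict(weights, bias, features):
    """Return class 1 if the weighted sum is positive, otherwise class 0."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0

def train_perceptron(samples, epochs=20, learning_rate=0.1):
    """Adjust the weights after every mistake on labelled (features, label) samples."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in samples:
            error = label - predict(weights, bias, features)
            if error != 0:  # learn only from wrong answers
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
    return weights, bias

def error_rate(weights, bias, samples):
    """Share of samples whose predicted class differs from the true label."""
    mistakes = sum(1 for features, label in samples
                   if predict(weights, bias, features) != label)
    return mistakes / len(samples)

# Hypothetical labelled reference samples used for training.
training_set = [([1.0, 1.0], 0), ([1.5, 0.5], 0), ([0.5, 1.5], 0),
                ([3.0, 3.0], 1), ([3.5, 2.5], 1), ([2.5, 3.5], 1)]
# Held-out samples; their labels are used only to score the predictions.
test_set = [([1.2, 0.8], 0), ([0.8, 1.1], 0), ([3.2, 2.9], 1), ([2.8, 3.3], 1)]

weights, bias = train_perceptron(training_set)
test_error = error_rate(weights, bias, test_set)
print(f"error rate on unseen samples: {test_error:.0%}")
```

The important design point is that the test samples never take part in training, so the error rate reflects how the model behaves on data it has not seen.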

Simulation modelling

Sometimes you need to understand how some indicators will behave when others change. How will sales change if the price rises? Conducting such experiments in reality is impractical, as it is costly and frequently leads to serious losses. To avoid experimenting on a real business, you can build a simulation model.

Say you want to understand how different factors influence sales. Data on sales, prices, the number of customers, and everything else related to the store is used to build a model. Then changes in prices, the number of sellers, and the flow of visitors are introduced into the model. All these changes affect other indicators, which makes it possible to choose the most successful changes and implement them in the real business.
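A very small simulation of this kind might look as follows. Everything here is an assumption chosen for illustration: the constant-elasticity demand curve, the elasticity of -1.5, the unit cost, and the normally distributed visitor flow. A real model would be fitted to the store's own data.

```python
import random

def simulate_monthly_profit(price, unit_cost=6.0, base_price=10.0,
                            base_visitors=1000, base_conversion=0.10,
                            elasticity=-1.5, days=30, seed=42):
    """Simulate one month of sales under an assumed constant-elasticity demand curve."""
    rng = random.Random(seed)  # fixed seed: every scenario sees the same visitor flow
    # Assumed relationship: the share of visitors who buy falls as the price rises.
    conversion = min(base_conversion * (price / base_price) ** elasticity, 1.0)
    profit = 0.0
    for _ in range(days):
        visitors = max(0, round(rng.gauss(base_visitors, 100)))
        units_sold = round(visitors * conversion)
        profit += units_sold * (price - unit_cost)
    return profit

# Compare hypothetical price points without touching the real business.
for candidate_price in (9.0, 10.0, 11.0, 12.0):
    print(candidate_price, round(simulate_monthly_profit(candidate_price), 2))
```

Fixing the random seed means the scenarios differ only in price, which makes the comparison between them fair.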

With simulation modelling, the future is predicted not from real data but from hypothetical data. A simulation model can be built even from a limited data set, but the more data is involved, the more accurate the model, since it takes more factors into account. It is important to remember that even a large-scale model often does not take every factor into account. Modelling can therefore give a wrong result, so any decision based on it should be implemented with all the risks in mind.

Simulation modelling is used when hypotheses need to be tested and testing them on a real business is too expensive. For example, a large-scale price change over a long period can bring down a business, so it is best to test it on a model before taking such a step.

Predictive analytics

Often it is necessary to make predictions about the future from previously collected data, for example to estimate next year's sales figures based on current data. Big data predictive analytics helps with such forecasts. The task of this method is to identify the key parameters that influence the outcome. Such analytics is now applied in various fields. This method helps predict:

- company growth and financial output;

- sales and customer behaviour on the market;

- banking and insurance fraud;

- market development.
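As a minimal sketch of the next-year sales forecast mentioned above, the example below fits a straight-line trend with ordinary least squares. The yearly figures are hypothetical, and a linear trend is only one simple assumption; real predictive analytics usually combines many more influencing parameters.

```python
def fit_trend(years, sales):
    """Ordinary least squares for sales = intercept + slope * year."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(sales) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(years, sales))
        / sum((x - mean_x) ** 2 for x in years)
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical yearly sales figures, in thousands of units.
years = [2019, 2020, 2021, 2022, 2023]
sales = [120, 135, 150, 160, 180]

slope, intercept = fit_trend(years, sales)
forecast_2024 = intercept + slope * 2024
print(round(forecast_2024, 1))  # 192.5
```

Here the fitted slope is 14.5, so the model expects sales to grow by about 14.5 thousand units per year.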

Manufacturing enterprises often implement industrial IoT platforms with sets of sensors that collect data on the operation of equipment. Analytics systems can then predict breakdowns and maintenance times; machine learning platforms are also used for this. These IoT platforms are often deployed in the cloud to reduce the cost of developing, managing, and operating IoT solutions.
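To give a feel for how sensor data can trigger a maintenance warning, here is a deliberately simple rule: raise an alert when the rolling average of recent readings exceeds a limit. This is a stand-in for the machine learning models such platforms use, and the window size, the 75 °C limit, and the readings are all assumptions.

```python
from collections import deque

def first_alert(readings, window=3, limit=75.0):
    """Return the index at which the rolling mean first exceeds `limit`, else None."""
    recent = deque(maxlen=window)  # keeps only the last `window` readings
    for index, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window and sum(recent) / window > limit:
            return index
    return None

# Hypothetical bearing temperatures (°C) from one sensor, one reading per hour.
temperatures = [61.0, 62.5, 61.8, 63.0, 70.2, 74.9, 79.5, 83.1]
print(first_alert(temperatures))  # 7
```

Averaging over a window keeps a single noisy reading from triggering a false alarm, at the cost of reacting slightly later.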

Crowdsourcing

Big data analysis is usually done by computers, but sometimes people can do it as well. This is the purpose of crowdsourcing: involving a large group of people to solve a problem. This method of processing big data works well where the task is a one-off and there is no point in developing a complex artificial intelligence system to solve it. Where big data needs to be analyzed regularly, a system based on machine learning is likely to be cheaper than crowdsourcing.
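When many people label the same items, their answers still have to be combined. One common and simple approach is a majority vote, sketched below. The image names, the labels, and the 60% agreement threshold are hypothetical.

```python
from collections import Counter

def aggregate_labels(votes_by_item, min_agreement=0.6):
    """Pick the majority label per item; return None where agreement is too low."""
    results = {}
    for item, votes in votes_by_item.items():
        label, count = Counter(votes).most_common(1)[0]
        agreement = count / len(votes)
        results[item] = (label if agreement >= min_agreement else None, agreement)
    return results

# Hypothetical answers from five contributors per image.
votes = {
    "img_001": ["cat", "cat", "dog", "cat", "cat"],
    "img_002": ["dog", "cat", "dog", "cat", "bird"],
}
print(aggregate_labels(votes))
# {'img_001': ('cat', 0.8), 'img_002': (None, 0.4)}
```

Items that come back as `None` are the ones contributors disagree on, so they can be sent out for more votes or reviewed by hand.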

Be at the forefront

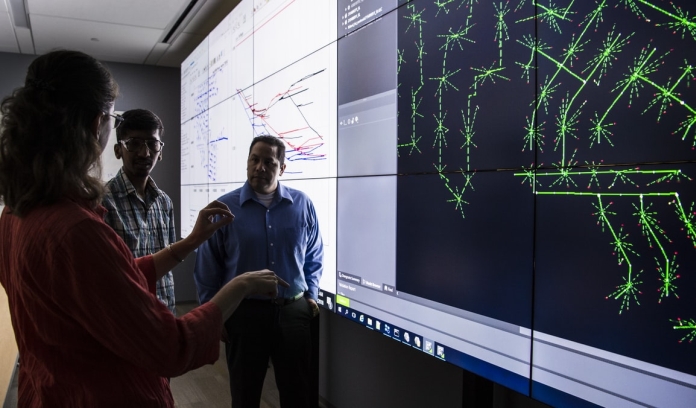

In popular culture, artificial intelligence is shrouded in myth and is often considered a dangerous and exceptional force. In fact, it is similar to any other technology. As more companies use AI, competition increases and costs drop, and artificial intelligence is becoming available to a wide range of organizations through solutions such as no-code and low-code ML.

Nowadays, it’s becoming difficult to compete with companies that use these AI technologies. To gain a leading position in any market, it is important to take advantage of this technology as early as possible.